By Jana Talley

This installment of DUE Point features the Proofs Project, funded through NSF’s Improving Undergraduate STEM Education (IUSE) program. To facilitate the development of students’ logical reasoning skills in introductory proofs courses, the project team designed instructional strategies and materials to evoke epistemological obstacles. They then provided instructors with professional development to implement these products and restructure their curricula. These initiatives have broadened the avenues through which students and instructors communicate logical reasoning to one another and are, therefore, improving the effectiveness of introductory proofs courses. Ultimately, this work positions students to progress more easily into advanced coursework. I sat down with the PIs, Rachel Arnold and Anderson Norton, to learn how the project was designed and how it has impacted the learning experiences of students transitioning from computational coursework into proof writing.

Q: Give us a brief synopsis of the Proofs Project.

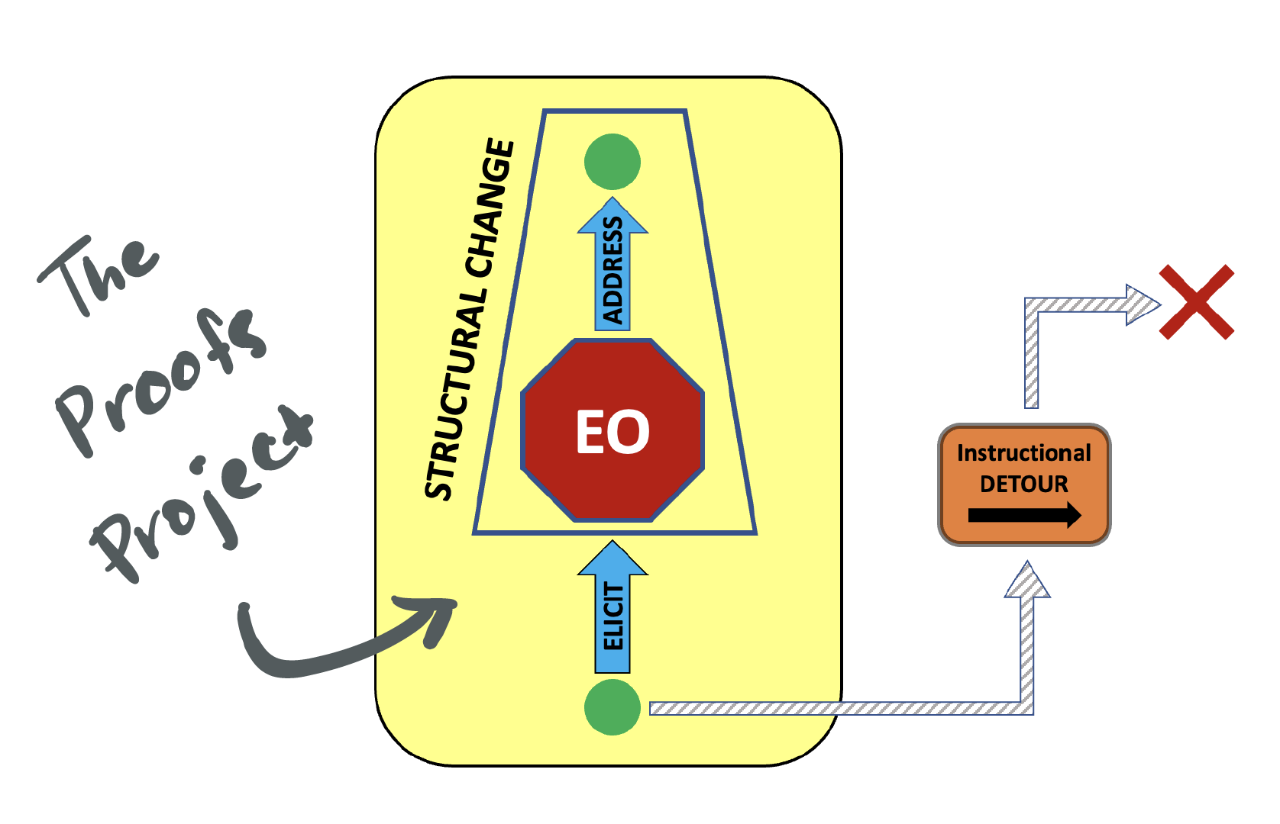

The Proofs Project goals are rooted in the need to transform introductory proofs courses to promote logical thinking. More specifically, the project aims to support teachers in carrying out instructional interactions that provide opportunities for students to develop the reasoning skills necessary for advanced STEM settings. These interactions empower instructors to evoke and address epistemological obstacles, which prompt students to overcome cognitive challenges. Through guided, productive struggle, these interactions support students’ understanding of logical structures.

Q: What are the aspects of your proposal that likely persuaded NSF to fund the Proofs Project?

To gain an understanding of students’ logical reasoning and how instructional interventions can provoke students to communicate understanding in a variety of ways, we conducted small-scale studies prior to submitting a proposal. We also delved into existing literature that spoke to how students approach logical reasoning and articulated the significance of epistemological obstacles in the growth of mathematical understanding. Notably, our initial proposal was not funded. While disappointing, it gave us time and space to continue examining data from our initial studies. This led us to clarify our project procedures, revise instructional strategies, and refine our research questions. Ultimately, we created a much more informed and detailed proposal. In some ways, the journey taught us that simulating each phase of implementation is key to writing a successful proposal.

Q: What are the biggest takeaways for instructors who have used your materials in their classrooms?

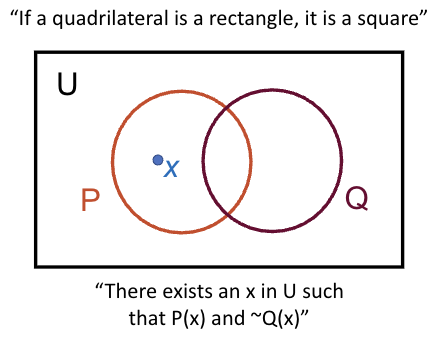

Unexpectedly, the use of Euler diagrams to explore logical implications was the most impactful teaching tool that came out of the Proofs Project. By visualizing mathematical relationships, students leverage their spatial reasoning to carry out logical transformations on mathematical statements.

Additionally, instructors involved with the project have experienced a reframing of their perspective on the ability of students to reason and learn proof writing techniques. They recognized how their own perspectives about how to learn mathematics had been interfering with their ability to interpret the unique ways that students communicate reasoning. Moreover, instructors became more attuned to identifying evidence of student understanding and began promoting the demonstration of reasoning in a variety of contexts.

It’s worth noting that students have acknowledged that they utilize their enhanced logical thinking skills beyond the proofs class. They recognize how having more mature reasoning skills improves their performance in other courses and even find themselves identifying logical flaws in social conversations.

Q: Tell us about your team.

Our work is supported by a host of valuable team members. It is important that we recognize the immense contributions of our graduate research assistants, postdoctoral fellows, graduate students, and undergraduate students. For example, Joseph Antonides first engaged with the project as a postdoctoral fellow who was enthusiastic about the spatial reasoning associated with Euler diagrams. He has been instrumental in the development of products and publications. One of our graduate students, Corinne Mitchell, collaborated with us to facilitate professional development workshops and disseminate our work through journal articles. Several other graduate and undergraduate students have used our data in their research training and have gone on to co-author papers and presentations at national conferences.

Q: In what ways has your project attended to NSF’s broader impact merit criteria?

On Virginia Tech’s campus, instructors have fully integrated the Proofs Project tools to center student thinking in applicable courses. Though many intended to implement limited portions of the content, the impact of new strategies on their teaching and student learning encouraged complete redesigns of their courses.

As the project took shape, we collaborated with local colleges and universities to provide their faculty with professional development on how to implement our products. Our network was then further expanded by a partnership with MAA. Through their Open Math program we have offered virtual week-long professional development to instructors across the country. Participants formed collaborations beyond their own campuses to design instructional activities by adapting our strategies and tools to their own students’ needs.

Perhaps the broadest impact is being realized through the availability of our research and ever-growing set of Proofs Project teaching modules found on the website. There, anyone can gain access to our research and materials packaged for their desired level of implementation. Our hope is that the site continues to expand and serve as a resource for all educators dedicated to supporting students as they cultivate logical reasoning skills.

Author’s Note:

If you would like to know more about this NSF Project, Addressing Cognitive and Instructional Challenges in Introductory Proofs Courses: A Gateway to Advanced STEM Studies (#2141626), contact the PIs, Dr. Rachel Arnold (rachel.arnold@vt.edu) or Dr. Anderson Norton (norton3@vt.edu).

Jana Talley is a Co-Editor of DUE Point and an associate professor in the Department of Mathematics and Statistical Sciences at Jackson State University.
