By David Bressoud @dbressoud

As of 2024, new Launchings columns appear on the third Tuesday of the month.

Before Cauchy, integration was usually defined as the inverse process of differentiation. This creates problems when there is no nice, closed form for the antiderivative. For example, asking Mathematica for the integral from 0 to $x$ of $\sin t^2$ produces the rather confusing

$$\sqrt{\frac{\pi}{2}}\,\text{FresnelS}\!\left(\sqrt{\frac{2}{\pi}}\,x\right).$$

A little exploration reveals that FresnelS refers to the Fresnel function used in optics, which Mathematica defines as

$$\text{FresnelS}(z) = \int_0^z \sin\!\left(\frac{\pi t^2}{2}\right)dt.$$

In other words, after adjusting for the factor of $\pi/2$, it has just told you that

$$\int_0^x \sin t^2\,dt = \int_0^x \sin t^2\,dt.$$

Not particularly useful.
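The adjustment for the factor of $\pi/2$ is the substitution $t = \sqrt{\pi/2}\,u$. Under it, $\pi u^2/2 = t^2$, $dt = \sqrt{\pi/2}\,du$, and the upper limit $u = \sqrt{2/\pi}\,x$ becomes $t = x$. Assume Mathematica's answer has the form $\sqrt{\pi/2}\,\text{FresnelS}\big(\sqrt{2/\pi}\,x\big)$ and that FresnelS is $\int_0^z \sin(\pi u^2/2)\,du$. The circularity then unwinds in one line:

$$\sqrt{\frac{\pi}{2}}\,\text{FresnelS}\!\left(\sqrt{\frac{2}{\pi}}\,x\right) = \sqrt{\frac{\pi}{2}}\int_0^{\sqrt{2/\pi}\,x} \sin\!\left(\frac{\pi u^2}{2}\right)du = \int_0^{x} \sin t^2\,dt.$$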

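To Lagrange, this was not a problem because he believed that every function could be written as a power series, and one can always integrate a power series term by term. In particular, the power series for $\sin(t^2)$ is just the power series for $\sin x$ with $x$ replaced by $t^2$,

$$\sin(t^2) = t^2 - \frac{t^6}{3!} + \frac{t^{10}}{5!} - \cdots = \sum_{n=0}^{\infty} \frac{(-1)^n\,t^{4n+2}}{(2n+1)!}.$$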

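It follows that

$$\int_0^x \sin t^2\,dt = \frac{x^3}{3} - \frac{x^7}{7\cdot 3!} + \frac{x^{11}}{11\cdot 5!} - \cdots = \sum_{n=0}^{\infty} \frac{(-1)^n\,x^{4n+3}}{(4n+3)\,(2n+1)!}.$$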
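As a quick check that Lagrange's term-by-term approach gives a usable answer here, the sketch below sums the series $\sum_{n\ge 0} (-1)^n x^{4n+3}/\big((4n+3)(2n+1)!\big)$. It compares the result with a numerical quadrature of $\sin t^2$. The helper names `sin_t2_series` and `simpson` are hypothetical. Simpson's rule is a standard quadrature swapped in only as an independent check, and the choices $x = 1.5$ and 20 terms are arbitrary.

```python
import math


def sin_t2_series(x: float, terms: int = 20) -> float:
    """Term-by-term integral of sin(t^2) from 0 to x:
    sum over n of (-1)^n x^(4n+3) / ((4n+3) (2n+1)!)."""
    return sum(
        (-1) ** n * x ** (4 * n + 3) / ((4 * n + 3) * math.factorial(2 * n + 1))
        for n in range(terms)
    )


def simpson(g, a: float, b: float, n: int = 1000) -> float:
    """Composite Simpson's rule on [a, b] with n subintervals (n made even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


x = 1.5
series_value = sin_t2_series(x)
quad_value = simpson(lambda t: math.sin(t * t), 0.0, x)
print(f"power series:   {series_value:.10f}")
print(f"Simpson's rule: {quad_value:.10f}")
```

The two printed values should agree to many decimal places, because the factorials in the denominators make the terms shrink very quickly.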

The problem, as mathematicians began to discover in the early 1800s, is that not every function can be written as a power series. In 1821, Cauchy produced the example (see Figure 1)

$$f(x) = \begin{cases} e^{-1/x^2}, & x \neq 0,\\ 0, & x = 0.\end{cases}$$

There is no question that this function is continuous and differentiable when $x$ is not zero, and it is not hard to verify that

$$\lim_{x\to 0} e^{-1/x^2} = 0 = f(0),$$

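so this function is continuous at 0. The proof that all derivatives of $f$ exist at $x=0$ and are in fact zero there relies on two observations.

First, each derivative of $e^{-1/x^2}$ has the form of a polynomial in $x^{-1}$ times $e^{-1/x^2}$. So for $x \neq 0$ we can write $f^{(k)}(x) = P_k(x^{-1})\,e^{-1/x^2}$. If the $k$th derivative of $f$ at 0 is zero, then

$$f^{(k+1)}(0) = \lim_{x\to 0} \frac{f^{(k)}(x) - f^{(k)}(0)}{x} = \lim_{x\to 0} x^{-1}P_k(x^{-1})\,e^{-1/x^2},$$

and the expression inside the limit is again a polynomial in $x^{-1}$ times $e^{-1/x^2}$.

Second, for any non-negative integer $k$,

$$\lim_{x\to 0} x^{-k}\,e^{-1/x^2} = 0.$$

Applying this to each term of the polynomial shows that $f^{(k+1)}(0) = 0$. Starting from $f(0) = 0$, it follows that every derivative of $f$ at 0 exists and equals zero.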

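To prove this second observation, we write $x^{-k}e^{-1/x^2} = x^{-k}/e^{1/x^2}$ and invoke l'Hospital's rule for an infinite limit over an infinite limit. For $k \ge 1$, one application gives

$$\lim_{x\to 0} \frac{x^{-k}}{e^{1/x^2}} = \lim_{x\to 0} \frac{-k\,x^{-k-1}}{-2x^{-3}\,e^{1/x^2}} = \frac{k}{2}\lim_{x\to 0} \frac{x^{-(k-2)}}{e^{1/x^2}},$$

so each application lowers the power of $x^{-1}$ by 2.

If $k$ is even, we wind up with

$$\lim_{x\to 0} \frac{x^{-k}}{e^{1/x^2}} = \left(\frac{k}{2}\right)!\,\lim_{x\to 0} e^{-1/x^2} = 0.$$

If $k$ is odd, we wind up with

$$\lim_{x\to 0} \frac{x^{-k}}{e^{1/x^2}} = \frac{k(k-2)\cdots 3\cdot 1}{2^{(k+1)/2}}\,\lim_{x\to 0} x\,e^{-1/x^2} = 0.$$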
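The first observation can be made concrete. Write the $k$th derivative of $e^{-1/x^2}$ as $P_k(x^{-1})\,e^{-1/x^2}$ and set $u = x^{-1}$. Since $du/dx = -u^2$, the chain rule gives the recursion $P_{k+1}(u) = 2u^3P_k(u) - u^2P_k'(u)$, starting from $P_0 = 1$.

The sketch below builds these polynomials as exponent-to-coefficient dictionaries. It then evaluates the difference quotients $f^{(k)}(x)/x$ for shrinking $x$. The helper names (`next_poly`, `derivative_polys`, `derivative_at`) are hypothetical. A numerical table only illustrates the limit; it does not replace the l'Hospital argument.

```python
import math
from collections import defaultdict


def next_poly(p: dict[int, int]) -> dict[int, int]:
    """Given P_k as {exponent: coefficient} in u = 1/x, return P_{k+1}.

    Because du/dx = -u^2, the chain rule gives
    d/dx [P_k(u) e^(-u^2)] = (2 u^3 P_k(u) - u^2 P_k'(u)) e^(-u^2).
    """
    q = defaultdict(int)
    for e, c in p.items():
        q[e + 3] += 2 * c        # 2 u^3 * (c u^e)
        if e:
            q[e + 1] -= e * c    # -u^2 * (e c u^(e-1))
    return {e: c for e, c in q.items() if c}


def derivative_polys(k_max: int) -> list[dict[int, int]]:
    """Return P_0, ..., P_{k_max}, starting from P_0 = 1 (the function itself)."""
    polys = [{0: 1}]
    for _ in range(k_max):
        polys.append(next_poly(polys[-1]))
    return polys


def derivative_at(p: dict[int, int], x: float) -> float:
    """Evaluate P(1/x) * e^(-1/x^2) for x != 0."""
    u = 1.0 / x
    return sum(c * u**e for e, c in p.items()) * math.exp(-u * u)


polys = derivative_polys(5)
for k, p in enumerate(polys):
    # Difference quotient f^(k)(x) / x; its limit as x -> 0 is f^(k+1)(0).
    quotients = [derivative_at(p, x) / x for x in (0.5, 0.2, 0.1, 0.05)]
    print(k, ["%.3e" % q for q in quotients])
```

For example, the recursion reproduces $f'(x) = 2x^{-3}e^{-1/x^2}$ and $f''(x) = (4x^{-6} - 6x^{-4})\,e^{-1/x^2}$. Large values at $x = 0.5$ give way to astronomically small ones by $x = 0.05$, as the second observation predicts.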

The power series for $e^{-1/x^2}$ expanded about $x=0$ would therefore have to be

$$\sum_{k=0}^{\infty} \frac{f^{(k)}(0)}{k!}\,x^k = 0 + 0\,x + 0\,x^2 + \cdots = 0.$$

This series equals $f(0)$ at $x=0$. But while $e^{-1/x^2}$ is extremely flat near $x=0$, it is, in fact, strictly positive whenever $x$ is not zero. The power series agrees with the function only at $x=0$, so we cannot integrate $e^{-1/x^2}$ by integrating its power series.
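To see concretely what goes wrong, the sketch below integrates $e^{-1/x^2}$ from 0 to 1 numerically. It compares the result with the closed form $e^{-1} - \sqrt{\pi}\,\operatorname{erfc}(1) \approx 0.0891$, which follows from the substitution $u = 1/x$ and one integration by parts. Integrating the all-zero power series term by term would give 0 instead. The helper names are hypothetical, and Simpson's rule is used only as an independent numerical check.

```python
import math


def f(x: float) -> float:
    """Cauchy's example: e^(-1/x^2) for x != 0, and 0 at x = 0."""
    return math.exp(-1.0 / (x * x)) if x != 0 else 0.0


def simpson(g, a: float, b: float, n: int = 2000) -> float:
    """Composite Simpson's rule on [a, b] with n subintervals (n made even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


numeric = simpson(f, 0.0, 1.0)
# Substituting u = 1/x and integrating by parts gives
# the integral of f from 0 to 1 = e^(-1) - sqrt(pi) * erfc(1).
closed_form = math.exp(-1.0) - math.sqrt(math.pi) * math.erfc(1.0)
# Every Taylor coefficient at 0 is zero, so the term-by-term integral is 0.
from_power_series = 0.0

print(f"Simpson's rule:          {numeric:.10f}")
print(f"closed form:             {closed_form:.10f}")
print(f"integrated power series: {from_power_series}")
```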

There is now no way we can define the integral as the inverse of differentiation. Cauchy realized he needed a very different way of defining the integral. He chose to start with the definite integral.

While the concept of evaluating an integral over a specific interval can be traced back to Newton and Leibniz, Cauchy credits Joseph Fourier with inventing the notation that attaches the upper and lower limits to the integral sign,

$$\int_a^b f(x)\,dx.$$

By creating a rigorous definition of exactly what this means, Cauchy is able to prove that the definite integral over a closed and bounded interval exists for every continuous function, even the very strange function $e^{-1/x^2}$ defined above.

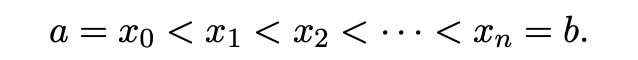

To understand his definition, we begin by "partitioning" the closed interval $[a,b]$, breaking it into pieces,

$$a = x_0 < x_1 < x_2 < \cdots < x_n = b.$$

Though not the exact approach taken by Cauchy, the best way to understand his definition of the definite integral was described by Vito Volterra in 1881, based on an idea of Gaston Darboux. Take the least upper bound $M_i$ and the greatest lower bound $m_i$ of $f$ on the $i$th subinterval $[x_{i-1}, x_i]$. These give upper and lower limits on the possible value of the definite integral,

$$\sum_{i=1}^{n} m_i\,(x_i - x_{i-1}) \;\le\; \int_a^b f(x)\,dx \;\le\; \sum_{i=1}^{n} M_i\,(x_i - x_{i-1}).$$

If there is a single value V that lies below every upper bound and above every lower bound, then that is the value of the definite integral. (See Figure 2 where the difference between the upper and lower bounds is the combined area of the rectangles with diagonal hatching. Note that as the subintervals get shorter, this area decreases.)
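The upper and lower bounds can be computed directly when the function is increasing, because then $m_i = f(x_{i-1})$ and $M_i = f(x_i)$. The sketch below assumes exactly that. It does not cover a general continuous function, whose bounds must be located inside each subinterval. It uses Cauchy's example $e^{-1/x^2}$, which is increasing on $[0,1]$, together with uniform partitions; the helper names are hypothetical.

```python
import math


def f(x: float) -> float:
    """Cauchy's example e^(-1/x^2), with f(0) = 0; increasing on [0, inf)."""
    return math.exp(-1.0 / (x * x)) if x != 0 else 0.0


def uniform_partition(a: float, b: float, n: int) -> list[float]:
    """Points a = x_0 < x_1 < ... < x_n = b with equal spacing."""
    return [a + (b - a) * i / n for i in range(n + 1)]


def darboux_sums_increasing(g, points: list[float]) -> tuple[float, float]:
    """Lower and upper sums for a function g that is increasing on the
    interval, so that on [x_{i-1}, x_i] the greatest lower bound m_i is
    g(x_{i-1}) and the least upper bound M_i is g(x_i)."""
    lower = upper = 0.0
    for left, right in zip(points, points[1:]):
        width = right - left
        lower += g(left) * width
        upper += g(right) * width
    return lower, upper


results = {}
for n in (4, 16, 64, 256):
    lower, upper = darboux_sums_increasing(f, uniform_partition(0.0, 1.0, n))
    results[n] = (lower, upper)
    print(f"n = {n:3d}: {lower:.6f} <= integral <= {upper:.6f}  (gap {upper - lower:.6f})")
```

For an increasing function on a uniform partition, the gap telescopes to $(f(1) - f(0))/n = e^{-1}/n$. It shrinks in proportion to $1/n$, just as the hatched rectangles in Figure 2 shrink when the subintervals get shorter.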

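Given a continuous function $f$ on $[a,b]$, suppose, as explained in the Launchings column "On Continuity," that we choose the subintervals short enough that $M_i$ and $m_i$ differ by less than $\delta$ on each of them. Then the distance between the upper and lower bounds on the integral is

$$\sum_{i=1}^{n} (M_i - m_i)(x_i - x_{i-1}) < \delta \sum_{i=1}^{n} (x_i - x_{i-1}) = \delta\,(b-a).$$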

Since δ can be brought as close to zero as we wish, there can be only one value that lies between these bounds. That is the value of the definite integral.

There is just one problem with this proof. If we specify how close the upper and lower bounds must be, how do we know that a single subinterval length brings $M_i$ and $m_i$ within $\delta$ of each other on every subinterval at once? This is not a problem that occurred to Cauchy, and it would not be tackled until 1852, more than 30 years after Cauchy defined the definite integral. It is a story that will be told in a later posting on Launchings.

In the meantime, Cauchy faced an obvious and immediate problem. If the integral is defined as the limit of a summation, how do we connect it to the fact that in many cases integration can be understood as the reverse of differentiation? That question will be answered in next month's Launchings.

David Bressoud is DeWitt Wallace Professor Emeritus at Macalester College and former Director of the Conference Board of the Mathematical Sciences. Information about him and his publications can be found at davidbressoud.org