By Lew Ludwig

"The deep breath before the plunge" - a sentiment Gandalf shares with Pippin in The Return of the King. This phrase often runs through my mind as the last days of summer break wind down and the new school year is about to begin. To be clear, I do not see the new year as heading off to battle, as in Gandalf's reference, but heading into our third fall in the presence of generative AI, with its relentless release of newer, more powerful models, does make me look at this semester with a bit more apprehension than usual.

This isn't our first quest into AI-enhanced teaching territory. We're seasoned travelers now, having weathered the initial shock of ChatGPT's arrival and the subsequent scramble to understand what it all meant. Yet each new semester feels like standing at a familiar crossroads with an unfamiliar path ahead.

I appreciated the number of notes from readers intending to try the TILT framework I laid out in the last post. It is a practical way to use AI to engage our students without changing our learning outcomes or treating the technology as a shiny new toy that we must try. The response reinforced my belief that we're ready to move beyond reactive approaches toward more intentional pedagogical choices.

The False Start/Wrong Path

This got me thinking about AI's role in the classroom, something we've all been struggling with. In recent pre-workshop surveys, numerous registrants asked something like "How can I, or my students, effectively use AI in the classroom to support learning?" I appreciate how the tone has shifted from "How do I AI-proof my class?", which I've come to believe is an unsustainable exercise.

However, as AI becomes a larger part of our world, "How can I use AI in my classroom?" is the wrong question. This question, rooted in a genuine desire to innovate and engage learners, puts the technology at the center of our planning. Like eager adventurers who've spotted something shiny along the roadside, we've been tempted to chase after this new AI technology, letting it dictate our route rather than staying true to our intended destination.

But experienced educators know that following every interesting detour is a recipe for wandering in circles. We need to chart our course first, then decide when these new paths might actually serve our journey.

Establishing Your True Destination

Instead of asking "How can I use AI?", we should first ask "What are the specific learning outcomes for our class?" If you are like me, someone whose teaching has developed over years of trial and error and of borrowing from or improving on others' teaching, articulating learning outcomes can seem like a daunting task. I have a gut sense of why I want the students to do what I ask, but I have a hard time putting that into words.

So let's start small. Instead of the learning objectives for an entire class, let's focus on one well-used assignment. Let's suppose we have an assignment on related rates. What are the specific learning outcomes for this assignment? "Because it's on the department topic list for the course," is probably not a specific learning outcome.

Finding a Knowledgeable Companion

Interestingly, we can use AI to help here - think of it as consulting a wise guide who's traveled these paths before. Start by giving it context:

PROMPT: You are an expert in undergraduate mathematics pedagogy, particularly the calculus sequence. And you are well versed in the MAA's Instructional Practices Guide, as well as pedagogical techniques like Bloom's Taxonomy, Fink's Backward Design, and the SAMR model.

Next, share your assignment with the AI model, either by cutting and pasting or by uploading it as a file, then ask:

PROMPT: What are the specific learning outcomes for this assignment?

I often find people are surprised by what they get. AI shares the obvious objectives, but it also tends to catch smaller, nuanced ones that we may have overlooked. If you think you are missing an objective or outcome, such as "demonstrate the ability to communicate effectively," ask AI to help weave that into the assignment.

Scouting the Terrain Ahead

Once you are satisfied with your learning outcomes - once you know where you're headed - it's time to scout the path ahead. See whether AI might help or hinder the assignment:

PROMPT: How might students use AI tools while working on this assignment?

This isn't about permission or prohibition yet; it's about exploring the landscape. What does the terrain look like? Where might your students naturally turn to AI for help? Understanding the territory helps you prepare for what lies ahead.

Identifying the Perils Along the Path

But we can't let our fascination with this shiny new technology blind us to potential dangers. We should also consider where things might go wrong:

PROMPT: How might AI undercut the goals of this assignment? How could you mitigate this?

This question forces us to look honestly at the potential downsides. Where might AI lead students away from the learning you've designed? What apparent shortcuts might actually bypass the growth you're hoping for?

Finding the Hidden Passages

Finally, with a clear destination and awareness of both terrain and pitfalls, we can ask:

PROMPT: How might AI enhance the assignment?

Now we're looking for those hidden passages that might make the journey richer, not just easier. How might AI help students go deeper, see connections they'd miss otherwise, or engage with the material in ways that amplify rather than replace their thinking?
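
For readers who like to script things, the prompts above can be collected, in order, into a few lines of Python. This is only a sketch under my own assumptions: the names `CONTEXT_PROMPT`, `FOLLOW_UP_PROMPTS`, and `build_prompt_sequence` are hypothetical, the sample ladder problem is made up for illustration, and nothing here calls an AI model. It simply assembles the text you would paste into a chat, one step at a time.

```python
from typing import List

# Context prompt that opens the conversation (wording taken from this post).
CONTEXT_PROMPT = (
    "You are an expert in undergraduate mathematics pedagogy, particularly "
    "the calculus sequence. And you are well versed in the MAA's "
    "Instructional Practices Guide, as well as pedagogical techniques like "
    "Bloom's Taxonomy, Fink's Backward Design, and the SAMR model."
)

# The four questions from this post, in the order they are asked:
# outcomes first, then terrain, then perils, then enhancements.
FOLLOW_UP_PROMPTS = [
    "What are the specific learning outcomes for this assignment?",
    "How might students use AI tools while working on this assignment?",
    "How might AI undercut the goals of this assignment? "
    "How could you mitigate this?",
    "How might AI enhance the assignment?",
]


def build_prompt_sequence(assignment_text: str) -> List[str]:
    """Return the prompts in order, attaching the assignment to the first question.

    Hypothetical helper: it only builds strings and never contacts an AI service.
    """
    first_question = (
        f"{FOLLOW_UP_PROMPTS[0]}\n\nAssignment:\n{assignment_text.strip()}"
    )
    return [CONTEXT_PROMPT, first_question, *FOLLOW_UP_PROMPTS[1:]]


if __name__ == "__main__":
    # Made-up related rates problem, used only as placeholder assignment text.
    sample_assignment = (
        "A 10-foot ladder leans against a wall. The bottom slides away from "
        "the wall at 2 feet per second. How fast is the top sliding down "
        "when the bottom is 6 feet from the wall?"
    )
    for step, prompt in enumerate(build_prompt_sequence(sample_assignment), start=1):
        print(f"--- Step {step} ---\n{prompt}\n")
```

Keeping the prompts in one ordered list makes it easy to reuse the same scouting routine on a different assignment: swap in new assignment text and walk through the same steps.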

The Fellowship Approach

And keep in mind, it is totally fine to leave AI out of an assignment entirely - as I argued using Maha Bali's cake analogy, some learning experiences are best kept pure and un-augmented. But whether you decide to invite AI along on the journey or leave it behind, include your students in these conversations as I discussed in this post.

If you do allow AI, model its use and talk with students about why. Don't just give students the green light—show them the map. Walk through a few example prompts together and explain why they work: What makes a prompt clear? What context does the AI need? Where might it go off track? You can even use AI itself to draft initial prompts, then revise those with your students in real time like I shared here.

This kind of guided modeling transforms the assignment from a solo race into a shared expedition—one where students are more likely to ask better questions, stay curious, and engage responsibly. Like any good fellowship, everyone has a role to play, wisdom to contribute, and responsibility for the success of the journey.

The deep breath before the plunge isn't about fear—it's about the pause that separates wandering from wayfinding. It's about choosing our path thoughtfully rather than being swept along by technological currents. As we stand at this familiar crossroads once again, we're not aimless wanderers anymore. We're experienced guides, ready to lead our students on purposeful journeys toward learning that matters.

What’s New

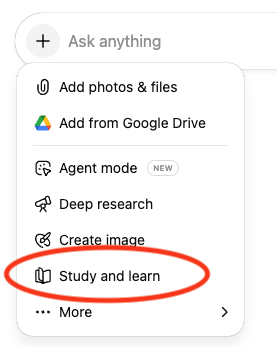

A popular topic at my spring and summer workshops was creating custom chatbots - Socratic-like tutors that would help students with specific classes without giving away answers. These could be built with custom GPTs in ChatGPT or commercial products like Magic School AI, which includes K-12 guardrails to keep younger users on task (no martini recipes, for example).

ChatGPT recently launched 'study mode' - a learning experience that guides students through problems like a tutor instead of just spitting out answers. While not perfect, it shows OpenAI is finally trying to address faculty concerns about students copying and pasting solutions. Of course, it still requires diligent students to use it effectively. And considering study mode gave me a decent martini recipe, they clearly need to work on those guardrails!

Lew Ludwig is a professor of mathematics and the Director of the Center for Learning and Teaching at Denison University. An active member of the MAA, he recently served on the project team for the MAA Instructional Practices Guide and was the creator and senior editor of the MAA’s former Teaching Tidbits blog.